The Jury is out: Scaling LLMs will not get us to Real Intelligence. What will?

Get ready for 'The Third Wave of AI'

GPT-5 was the turning point.

Prior to its release, promoters and investors were still clinging to the hope that simply scaling LLMs would continue to deliver significant improvements and get us all the way to human-level and superhuman AI. That dream is dead. Every AI lab has discovered that bigger models aren’t better; in fact, they can be worse (ref). Development has now shifted to building orchestrated collections of smaller models optimized for specific applications (ref).

OpenAI, DeepMind, Microsoft and others have substantially reduced public expectations of near-term progress and of the value of Gen AI (ref, ref, ref). The general message is that LLMs are missing some crucial features, capabilities and insights (ref).

The reality is actually worse than that. As Yann LeCun forcefully stated last year, “LLMs are an off-ramp to AGI. A distraction. A dead-end” (ref). LLMs, and statistical systems in general, have severe inherent limitations that prevent them from achieving real intelligence. Apart from unavoidable hallucinations, lack of reliability, security risks, and massive training, configuration and maintenance costs, an even more fundamental problem is that they cannot learn incrementally in real time. In fact, they cannot update their expensively trained model at all: it is read-only (ref).

This lack of adaptability forces LLM providers to constantly train new models in order to incorporate information that is only relatively up to date (current as of when training started!). Not only is this insanely expensive, but it also causes serious incompatibilities with existing applications, which then need to be repeatedly re-engineered and validated (ref).

External memory such as RAG and input buffers only serve as unreliable band-aids to somewhat mitigate the lack of true real-time learning (updating the core model) (ref).

So if LLMs are an off-ramp, then what’s the on-ramp?

A few years ago DARPA published a model they called ‘The Three Waves of AI’ (ref) referring to:

1. GOFAI (Good Old-Fashioned AI): mainly logic-based and expert systems developed in the 1980s and 1990s. This includes IBM’s Deep Blue chess champion.

2. Big Data: statistical neural networks, including Deep Learning, Generative AI, and LLMs. These are the systems we see today.

3. Adaptive, cognitive AI that can think, learn and reason like a human: adaptively, conceptually, autonomously, and with limited data and compute.

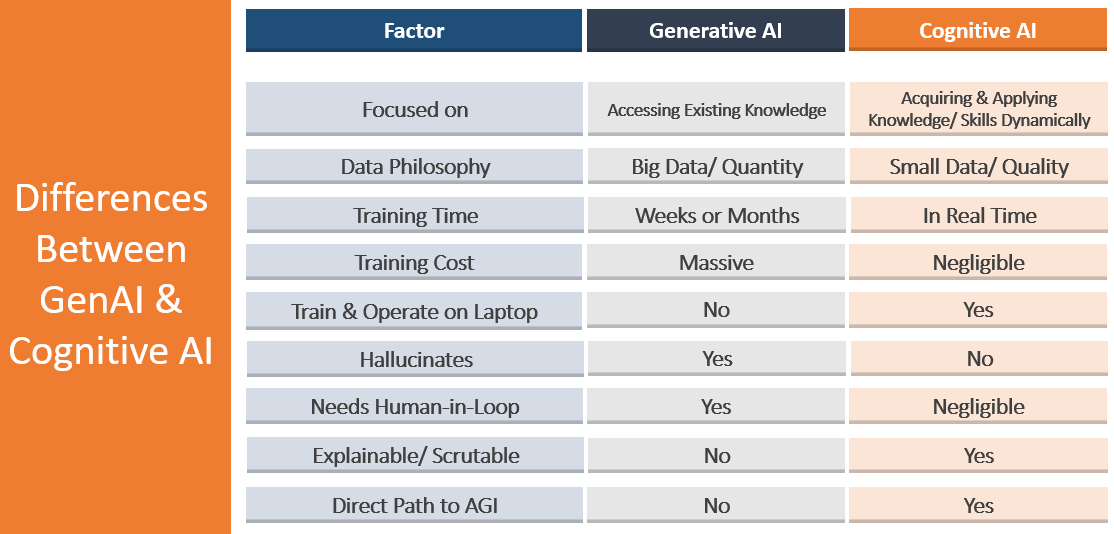

Gen AI and Cognitive AI are like chalk and cheese, like candles versus light bulbs, or like ladders versus rockets to get to the moon.

Gen AI is driven by big data and brute force. Cognitive AI starts from first principles: understanding what makes human intelligence work and what makes it so special. Humans can learn language and reasoning from a few million words, not 30 trillion. Our brains use 20 watts, not 20 megawatts; they don’t need nuclear power stations.

Cognitive AI is informed not only by computer science but also, importantly, by philosophy and cognitive psychology. It is defined by autonomous conceptual, contextual learning and reasoning, with an appreciation of the importance of real-time concept formation and adaptation, and of the power of metacognition. It integrates an understanding of learning, cognition and self-awareness to produce AI that knows what it doesn’t know, what it is doing, and why: reliable, responsible AI, leading to AGI.

As you can see, there are many advantages to Cognitive AI.

It is worth specifically highlighting the significant advantages of real-time incremental, lifelong learning alone.

Given all of these obvious benefits, why isn’t everyone working on Cognitive AI? There are many reasons, but a key one is that the required skills are quite scarce in the current crop of AI celebrities. In fact, prominent figures in AI, including Demis Hassabis (ref) and Elon Musk, have stated that “we don’t understand intelligence”. Well, speak for yourselves! The tremendous advances and success of Gen AI over the past decade have sucked all of the oxygen out of the air for alternative approaches. The researchers, engineers, entrepreneurs, and investors who have risen to the top are trained primarily in statistics, logic, and mathematics, not in cognitive science.

Are there any practical, commercially proven realizations of The Third Wave?

Aigo.ai has been developing and successfully commercializing limited versions of Cognitive AI over the past 15 years. The system is based on a deeply integrated neuro-symbolic architecture called INSA. It features a highly effective common knowledge representation for both neural-style pattern learning and matching and for symbolic-style reasoning. This design overcomes both the brittleness of traditional symbolic systems and the unreliability and batch-learning limitations of neural nets.

Aigo.ai is currently in the process of scaling up the autonomous learning ability of its system.

I love this article by Peter Voss, which perfectly captures a critical point often overlooked in AI development.

The current excessive investment in scaling large language models (LLMs) feels fundamentally flawed: bigger is not always better.