A few years ago DARPA formulated a categorization of past, present, and future approaches to AI called ‘The Three Waves of AI’ (ref). Roughly speaking, this refers to:

- 1st Wave: Traditional symbolic and logic approaches, including expert systems
- 2nd Wave: Statistical, big-data neural nets, including Generative AI
- 3rd Wave: Autonomous, adaptive Cognitive AI, including neuro-symbolic approaches

The last decade has seen a massive shift of focus (and investment) from the first to the second ‘Wave’, as deep learning (and now Generative AI) has delivered significantly better performance in many areas, such as speech and image recognition, translation, semantic search, summarization, and language and image generation.

However, it is becoming increasingly clear that these big-data, statistical approaches have serious inherent limitations. The most significant of these are poor accuracy and reliability (‘hallucinations’), the inability to learn incrementally in real time, and the lack of meta-cognition, including robust logical reasoning and planning: in other words, System 2 thinking (ref).

As we strive for true human-level artificial general intelligence (AGI), a new approach is needed: we need the accuracy and expressive power of symbolic systems together with the powerful, non-brittle pattern-matching ability of neural networks. We also want systems whose power consumption is much closer to the 20 watts our brains need than to the massive power required to train and operate LLMs (ref). Real AGI, just like humans, should also be able to learn language and reasoning from fewer than 2 million words instead of needing 10 trillion tokens or more.

‘The Third Wave’ offers a viable path by taking a Cognitive AI perspective to combine symbolic and neural network mechanisms. As a proof of concept, we can point to the human brain, whose cognitive mechanisms achieve symbolic reasoning on a purely neural substrate.

In light of these considerations, there has been a recent surge in research and development focused on neuro-symbolic AI approaches (ref). However, almost all of this work entails combining existing statistical systems with existing logic or reasoning engines. Unfortunately, this approach inherits the limitations of both technologies: the inability to learn incrementally and the brittleness of formal logic, plus the difficulty of connecting symbols to and from the opaque internal structure of neural nets. Furthermore, it doesn’t address the inherent cost and environmental impact of huge data and compute requirements.

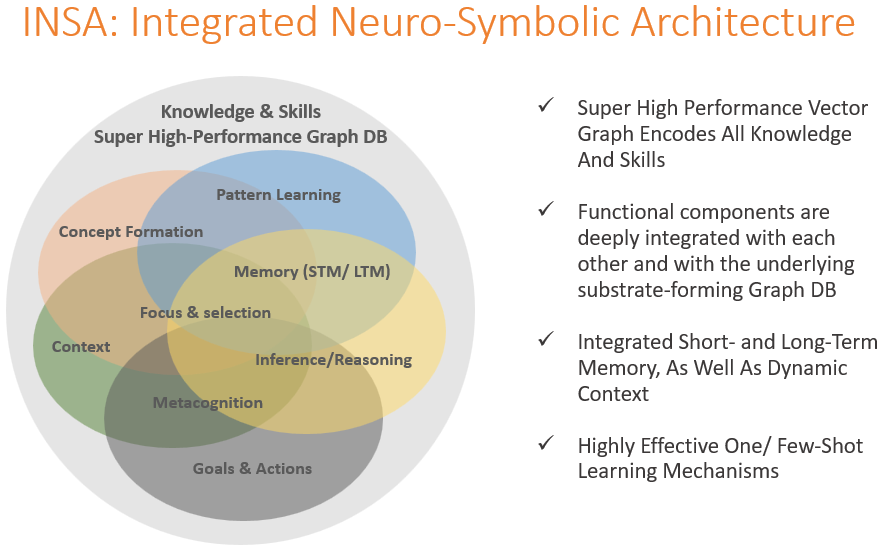

To address these issues effectively, we have developed INSA (Integrated Neuro-Symbolic Architecture), which deeply integrates, within one uniform structure, all the mechanisms required for human-level, human-like intelligence.

INSA’s core substrate is an ultra-high-performance vector graph database that encodes all of the system’s knowledge and skills. This knowledge graph is custom-designed for this architecture and is 1000 times faster than any commercially available graph database.

Vector node structures in the graph represent perceptual features, complete percepts, concepts, and symbols. They also encode context relationships, sequences, hierarchies, and other temporal and spatial constructs, both static and dynamic. This design lends itself well to importing and integrating, in real time, symbolic and logical data such as ontologies and databases, as well as unstructured data. Furthermore, the use of vectors allows fuzzy pattern matching, which overcomes brittleness.
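INSA’s implementation is proprietary, so the following is only a generic illustration of why vector-based matching is less brittle than exact symbolic lookup; it is not a description of how INSA does it. The names `Node` and `fuzzy_match` and the similarity threshold of 0.8 are hypothetical choices for this sketch. A query vector that does not exactly equal any stored node can still resolve to the closest node by cosine similarity, while a query that resembles nothing stored is rejected.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical graph node: a symbolic label plus a feature vector."""
    label: str
    vector: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def fuzzy_match(query: list[float], nodes: list[Node], threshold: float = 0.8):
    """Return (label, score) of the most similar node, or None if no node clears the threshold."""
    if not nodes:
        return None
    best = max(nodes, key=lambda n: cosine(query, n.vector))
    score = cosine(query, best.vector)
    return (best.label, score) if score >= threshold else None


nodes = [Node("cat", [1.0, 0.9, 0.0]), Node("car", [0.0, 0.1, 1.0])]
print(fuzzy_match([0.9, 1.0, 0.1], nodes))   # similar to "cat", though not identical
print(fuzzy_match([-1.0, 0.0, 0.0], nodes))  # resembles nothing stored, so None
```

An exact-match lookup of `[0.9, 1.0, 0.1]` would find nothing; the similarity-based lookup still resolves it to `cat`, which is the kind of tolerance the paragraph above refers to.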

Various core cognitive mechanisms, such as learning, short-term memory, spreading activation, pattern matching, prediction, generalization, and meta-cognitive signals, are deeply integrated with both the graph database and each other.
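Spreading activation is a well-known technique from cognitive modeling. The sketch below shows a generic version over a weighted graph and does not reflect INSA’s proprietary mechanism; the function name `spread_activation` and its parameters (`decay`, `threshold`, `max_steps`) are hypothetical values chosen for illustration. Activation starts at the source nodes, flows along weighted links while decaying at each hop, and stops when signals fall below the threshold or the step limit is reached.

```python
from collections import defaultdict


def spread_activation(graph, sources, decay=0.5, threshold=0.1, max_steps=3):
    """Propagate activation outward from `sources` through a weighted graph.

    graph: dict mapping node -> list of (neighbor, weight) pairs, weights in [0, 1].
    Returns a dict of node -> accumulated activation.
    """
    activation = defaultdict(float)
    frontier = {}
    for s in sources:
        activation[s] = 1.0
        frontier[s] = 1.0
    for _ in range(max_steps):
        next_frontier = defaultdict(float)
        for node, energy in frontier.items():
            for neighbor, weight in graph.get(node, []):
                passed = energy * weight * decay  # signal weakens at every hop
                if passed >= threshold:           # signals that are too weak are dropped
                    next_frontier[neighbor] += passed
        if not next_frontier:
            break
        for node, energy in next_frontier.items():
            activation[node] += energy
        frontier = next_frontier
    return dict(activation)


graph = {
    "dog": [("animal", 1.0), ("bark", 0.8)],
    "animal": [("living thing", 1.0)],
    "bark": [("tree", 0.3)],
}
print(spread_activation(graph, ["dog"]))
```

Activating `dog` primes `animal`, `bark`, and, two hops away, `living thing`, while the weak `bark` to `tree` link falls below the threshold, so `tree` is never activated.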

Real-time incremental learning allows the system to acquire new knowledge and skills from one or a few examples, eliminating the need for expensive, GPU-intensive training. Focusing on the quality of data rather than its quantity results in much higher accuracy and integrity while eliminating hallucinations.
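One simple, well-known way to learn from a single example without a retraining pass is a nearest-prototype (nearest class mean) learner. The sketch below uses that textbook technique purely to illustrate one-shot, incremental learning; it is a swapped-in method, not INSA’s proprietary approach, and the class name `PrototypeMemory` is hypothetical. Each concept is created from its first example and refined by a running-mean update as further examples arrive, so no dataset needs to be stored or reprocessed.

```python
import math


class PrototypeMemory:
    """Hypothetical incremental learner: one running-mean prototype vector per concept."""

    def __init__(self) -> None:
        self.prototypes: dict[str, list[float]] = {}  # concept label -> mean vector
        self.counts: dict[str, int] = {}              # concept label -> examples seen

    def learn(self, label: str, example: list[float]) -> None:
        """Add one example; an unseen label is created from this single example."""
        if label not in self.prototypes:
            self.prototypes[label] = list(example)
            self.counts[label] = 1
            return
        n = self.counts[label] + 1
        proto = self.prototypes[label]
        # Running-mean update: only the prototype is kept, no batch retraining.
        self.prototypes[label] = [p + (x - p) / n for p, x in zip(proto, example)]
        self.counts[label] = n

    def recognize(self, example: list[float]):
        """Return the label with the nearest prototype (Euclidean distance), or None if empty."""
        if not self.prototypes:
            return None
        return min(self.prototypes, key=lambda lbl: math.dist(self.prototypes[lbl], example))


mem = PrototypeMemory()
mem.learn("apple", [1.0, 0.0])    # one example is enough to create the concept
mem.learn("banana", [0.0, 1.0])
print(mem.recognize([0.9, 0.2]))  # nearest prototype is "apple"
mem.learn("apple", [0.8, 0.2])    # a second example refines the prototype
print(mem.prototypes["apple"])
```

The trade-off is that a single example yields a usable but rough concept, which sharpens only as further examples shift the prototype; the sketch also assumes that examples already arrive as meaningful feature vectors.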

Implementing this incremental learning and generalization via an adaptive, variable-size vector structure allows concepts to be encoded ontologically rather than statistically (based on word adjacency), as is the case with conventional embeddings. This allows for a more grounded representation of meaning.

The INSA cognitive core addresses key limitations of both purely statistical and logic-based systems and paves the way to AI with interactive human-level cognitive strength, adaptivity, and agency.

Using a common data structure for both low-level features and symbolic knowledge allows seamless transitions between direct stimulus-response operation (System 1) and explicit reasoning and planning interventions (System 2).

(Implementation details of INSA are proprietary)

All fundamental unsolved problems of AGI are concentrated in two elements of the scheme: 'Concept Formation' and 'Pattern Learning'.

For both, involving neural components in the design does not promise anything new. The search for patterns (and, in particular, the search for cause-and-effect relationships) is essentially a combinatorial task that requires generating hypotheses and then testing them. Finding a useful niche for a neural network here is difficult, to say the least.

In the task of forming concepts, neural networks can serve as detectors of predetermined, primitive features of observed reality, but this is equally feasible by other ("classical") means.

Interesting