All you need for Intelligence is... What?

Not attention or scaling or RAG or whatever, but Theory.

The ultimate goal of AI has always been to build machines that can think, learn, and reason like us (and better): to adapt to changing circumstances in real time, and to solve novel problems (more).

Early language applications such as Google Translate didn't aspire to such lofty goals, but as they scaled from millions to billions, and eventually to trillions of training words, something changed. At first it seemed that scaling was the answer. But it wasn't enough. For one, the labs ran out of data, and in any case scale alone didn't overcome the inherent limitations of these large (huge!) language models.

Transformers revolutionized LLMs via predictive attention. But we needed more…

More scaling and Reinforcement Learning from Human Feedback (RLHF). Nope…

Custom fine-tuning. No. Prompt engineering. What a pain...

More scaling and Retrieval-Augmented Generation (RAG). No cigar.

Training with Chain of Thought (CoT). Still hallucinating…

Million-token context windows and scaling. Nyet.

Scaling via Mixture of Agents (MoA). A million monkeys can’t produce Hamlet.

Agentic AI (Google Dialogflow on steroids). Nope, that didn't quite do it either.

Maybe if we standardize interfaces with Model Context Protocol (MCP)? :(

Now throw some serious compute at the problem with Reinforcement Learning, and what do you end up with? Expensive, hyper-optimized Narrow AI (more).

Ah! We need to scale via world models and more RL (and what a nice revenue source for Nvidia). Another trillion or two should do it — somehow…

No, this is not the road to Real Intelligence

Yes, each of these tweaks has added some functionality, but only a limited amount, and at significant, often uneconomical implementation and maintenance cost. More importantly, they simply cannot address core limitations (more). Why aren't these hacks getting us to robust, adaptive, general AI?

Because they are reactive fixes to limitations as they arise, not a fundamental, first-principles reassessment of what intelligence requires. The current AI monoculture's road to nowhere stumbles blindly around the design space instead of being guided by the North Star of understanding what makes human intelligence so special.

Unique aspects of our cognition have allowed us to create science and technology, and to dramatically improve the human condition. These abilities include:

- To quickly adapt to changing circumstances given limited time and resources
- To dynamically form contextual generalizations and high-level abstractions
- To reason conceptually, using self-reflection (more)

The consequences of this lack of principled design can be summarized as "The 7 Deadly Sins of AGI Design" (more).

On the other hand, the benefits of a real-time, highly adaptive cognitive approach are substantial.

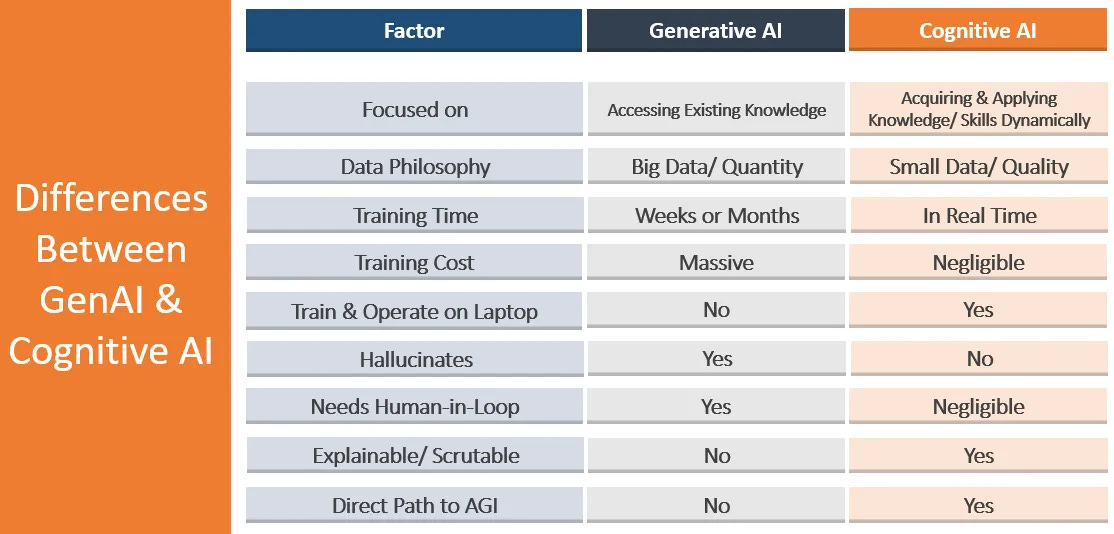

The Cognitive AI approach is nothing like the brute-force statistical process underlying Generative AI. It is much closer to human cognition, where a child can learn language and reasoning from a few million words, not trillions, and do so on something like 20 watts, not 20 gigawatts. It couldn't be more different. Like chalk and cheese.

Near-term commercial and academic reward systems are fueling the current Generative AI monoculture. Benchmaxxing, cherry-picked promo stats, and shiny prototypes continue to demonstrate progress, but solid commercial value is scarce. These systems are still narrow, not adaptive or general (more).

Alternative approaches based on a more solid theoretical foundation, such as Neuro-Symbolic cognitive architectures, will break us out of the current dead-end path and speed us toward real intelligence (more).

Strong take on the limitations of scaling-first approaches. The cognitive vs. statistical framing cuts through a lot of the hype. I've been skeptical of RLHF solving alignment issues precisely because it's bolted on rather than fundamental to the architecture. The energy comparison (20 watts vs. gigawatts) really hammers home how far we are from biological efficiency. Neuro-symbolic architectures make sense, but I dunno if the commercial incentives will shift anytime soon.

As you say, all these approaches come from advocates flailing around in desperation, trying all sorts of kludges that haven't worked and never will. We can say this confidently based on the fundamentals of transformer and diffusion models.