Cognitive AI vs Statistical AI

Why Cognitive AI, and not LLMs, will get us to AGI

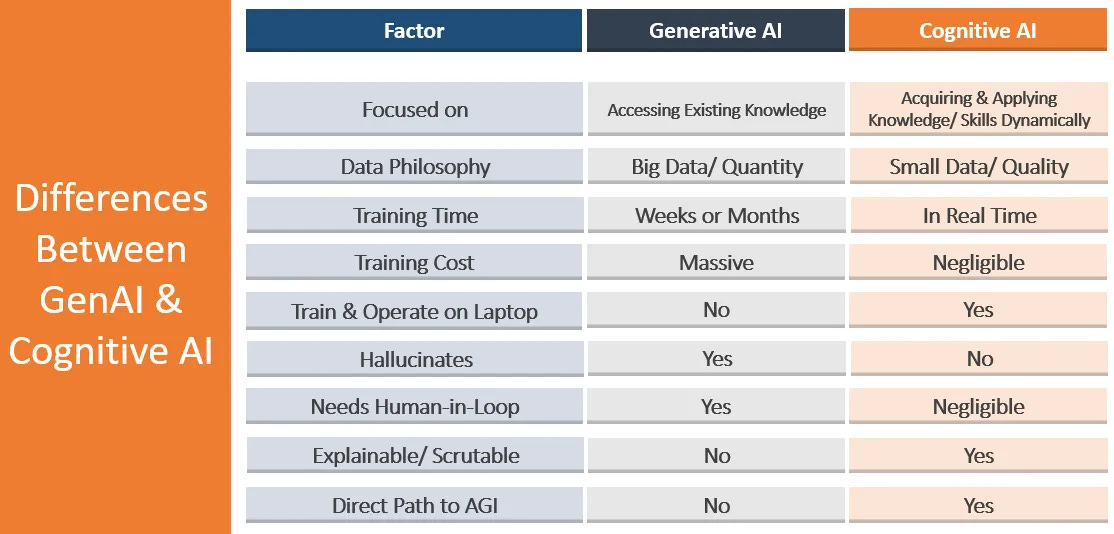

Statistical AI and Cognitive AI are like chalk and cheese — they couldn't be more different.

Statistical AI, such as Deep Learning, Generative AI, and Large Language Models, is inherently based on backpropagation and gradient descent. In simple terms, this means that all data needed for core training must be available up front. This mass of data is then ‘massaged’ via a massive number of error-reducing calculation cycles to produce a model of the statistical relationships discovered in the data. The result is frozen, read-only AI: the core model cannot be updated incrementally in real time. Not possible. The serious implication is that these models do not adapt to changes in the world and have to be discarded and retrained periodically to become (somewhat) more up to date.
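To make the ‘error-reducing calculation cycles’ concrete, here is a minimal sketch of full-batch gradient descent on a toy one-variable linear model. It is far simpler than the multi-layer networks that backpropagation trains, and the function name `train` and the sample data are illustrative assumptions. It does show the property described above: in this setup every update is computed over the whole dataset, so all of the data must be in hand before training begins, and the output is a fixed set of parameters.

```python
# Toy full-batch gradient descent: fit y = w*x + b by repeatedly reducing
# the mean squared error over the *entire* dataset on every step.

def train(xs: list[float], ys: list[float], lr: float = 0.05, epochs: int = 2000) -> tuple[float, float]:
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Errors are computed over all training examples at once
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    # The returned parameters are the finished model
    return w, b


if __name__ == "__main__":
    w, b = train([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
    print(f"w={w:.3f}, b={b:.3f}")  # converges towards w=2, b=1
```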

A number of techniques are used to mitigate this key limitation:

Fine-tuning the model after creation. This allows responses to be biased in a limited way, for example to suppress undesirable wording or ideas. Excessive fine-tuning can degrade model performance through catastrophic forgetting.

External databases connected to the model. One common variant is RAG (Retrieval-Augmented Generation). These techniques do not change the knowledge contained in the model in any way.

Large input buffers known as context windows. This type of short-term memory can contain all of the inputs and outputs of a particular user’s interaction with an LLM. For every single word it outputs, the model re-processes all of that history, which may run to hundreds of thousands of words! This approach can easily blur which prior interactions are actually relevant.

External web searches. This is the most common method used by LLMs. For each request, the system looks for pertinent web pages and uses their content to provide an answer. This can be very useful, provided that the relevant data is available and the sources are reliable. Ultimately, though, this is essentially just intelligent search.
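RAG and web search follow the same basic pattern: fetch external text relevant to the request and add it to the model’s input. Here is a minimal sketch, assuming naive word-overlap ranking in place of the vector embeddings or search engines that real systems use; `words`, `retrieve`, `build_prompt`, and the sample documents are hypothetical. Nothing in it touches model parameters, which is the point made above: the model’s own knowledge stays unchanged.

```python
import re


def words(text: str) -> set[str]:
    # Lower-cased alphabetic tokens; punctuation is ignored
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    # Rank documents by how many words they share with the query
    q = words(query)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str]) -> str:
    # Retrieved text is only added to the model's input; no model weights change
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "The Eiffel Tower is in Paris.",
    "Mount Everest is the highest mountain on Earth.",
]
print(build_prompt("Where is the Eiffel Tower?", docs))
```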

State-of-the-art LLMs are quite amazing. Five years ago, nobody would have thought that scaling up GPT would end up providing so much apparent intelligence and utility. We simply have no intuition for what massive scale can achieve.

Current SOTA models are trained on a mind-boggling 10 trillion words or more (good, bad, and ugly, and the system doesn’t know which is which). Using the largest datacenters ever built, they take weeks or months to train, costing hundreds of millions of dollars for a single training run.

Compare that to human intelligence. A child can learn language and reasoning from a few million words using a 20-watt brain, not 20 gigawatts.
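Taking the article’s own figures at face value, and treating ‘a few million words’ as on the order of 10^6, the gap works out roughly as:

```latex
\frac{10^{13}\ \text{words (LLM training)}}{\text{a few}\times 10^{6}\ \text{words (child)}} \sim 10^{6},
\qquad
\frac{20\times 10^{9}\ \text{W}}{20\ \text{W}} = 10^{9}
```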

This brings us to Cognitive AI, systems based on first principles of human cognition and intelligence.

DARPA calls Cognitive AI the Third (and final) Wave of AI.

The defining characteristics of Cognitive AI are those needed to achieve human-level general intelligence, or AGI. First, it demands the ability to learn and generalize in real time from potentially incomplete data, using limited time and resources: the same constraints facing humans in everyday life and in advanced research. Crucially, it also requires proactive, self-directed reasoning and decision making, without needing a human in the loop to validate it.

The two key terms are: real-world adaptive, and autonomous.

Like humans, Cognitive AI processes one sentence or idea at a time. It evaluates each input: Do I understand it? Does it make sense? Does it contradict existing knowledge? What does it make me think of? This proactive assessment can trigger clarification, follow-up questions, or research, helping to build a coherent, contextually consistent world model and to respond appropriately.
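As a rough illustration of this one-input-at-a-time loop, here is a hypothetical sketch (not INSA’s actual design, which the article does not describe) in which knowledge is stored as simple (subject, relation, value) facts. Each new input is checked against what is already known; a contradiction produces a clarifying question instead of a silent overwrite, and related facts can be recalled immediately. The class `IncrementalLearner` and its methods are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalLearner:
    # Hypothetical long-term store: (subject, relation) -> value
    facts: dict[tuple[str, str], str] = field(default_factory=dict)

    def process(self, subject: str, relation: str, value: str) -> str:
        """Integrate one input immediately, checking it against existing knowledge."""
        known = self.facts.get((subject, relation))
        if known is None:
            # New knowledge is usable right away; no retraining pass is needed
            self.facts[(subject, relation)] = value
            return f"learned: {subject} {relation} {value}"
        if known == value:
            return "already known"
        # Contradiction: ask for clarification instead of silently overwriting
        return f"question: is {subject} {relation} {known} or {value}?"

    def related(self, subject: str) -> list[str]:
        """'What does this make me think of?': other facts about the same subject."""
        return [f"{subject} {r} {v}" for (s, r), v in self.facts.items() if s == subject]


learner = IncrementalLearner()
print(learner.process("sky", "color", "blue"))   # learned: sky color blue
print(learner.process("sky", "color", "green"))  # question: is sky color blue or green?
print(learner.related("sky"))                    # ['sky color blue']
```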

There are very significant advantages to being able to integrate new knowledge and requests intelligently and incrementally in real time. Not only does this reduce the amount of initial training data a million-fold, and the amount and cost of compute by many orders of magnitude, but it also enables a focus on the quality of data instead of quantity. Furthermore, autonomously validated knowledge and reasoning enhance reliability and accuracy, and eliminate hallucinations. Finally, this approach, with a significantly smaller hardware footprint, paves the way for truly personal AI/AGI not beholden to mega-corporations.

Now, to get Cognitive AI to actually work in the real world, several historical limitations of cognitive architectures had to be overcome:

Engineer a highly effective, real-time adaptive common knowledge representation for all knowledge and skills that the system acquires

Be able to seamlessly handle, and transition between, pattern and symbolic data

Have fully integrated short- and long-term memory as well as context

Have highly integrated learning, generalization, reasoning and metacognitive systems to process knowledge and skills
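The article does not describe how INSA meets these requirements. Purely as a hypothetical sketch of what the first three could mean in code, the following stores every item as one node type carrying both a symbolic label and a pattern (feature vector), keeps long-term associations as links, and uses decaying activation as short-term memory and context. The class names, the decay rule, and nearest-neighbour recognition are all illustrative assumptions; the fourth requirement (integrated learning, reasoning, and metacognition) is not modelled.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str                                     # symbolic handle, e.g. "dog"
    features: list[float]                          # pattern data, e.g. a sensor embedding
    links: set[str] = field(default_factory=set)   # long-term associations
    activation: float = 0.0                        # short-term memory / current context


class Memory:
    """Hypothetical unified store: one node type serves both pattern and symbol."""

    def __init__(self, decay: float = 0.5):
        self.nodes: dict[str, Node] = {}
        self.decay = decay

    def add(self, label: str, features: list[float], links: tuple[str, ...] = ()) -> Node:
        # Learning is incremental: nodes and links can be added at any time
        node = self.nodes.setdefault(label, Node(label, list(features)))
        for other in links:
            if other in self.nodes:
                node.links.add(other)
                self.nodes[other].links.add(label)
        return node

    def recognize(self, features: list[float]) -> str:
        # Pattern -> symbol: return the label of the nearest stored pattern
        return min(self.nodes.values(), key=lambda n: math.dist(n.features, features)).label

    def attend(self, label: str) -> None:
        # Short-term activation fades, then the attended node and its links light up
        for n in self.nodes.values():
            n.activation *= self.decay
        self.nodes[label].activation = 1.0
        for other in self.nodes[label].links:
            self.nodes[other].activation = max(self.nodes[other].activation, 0.5)

    def context(self, threshold: float = 0.25) -> list[str]:
        # Current context = nodes whose short-term activation is still high enough
        return sorted(name for name, n in self.nodes.items() if n.activation >= threshold)


m = Memory()
m.add("dog", [1.0, 0.0])
m.add("cat", [0.9, 0.2], links=("dog",))
m.add("car", [0.0, 1.0])
print(m.recognize([0.95, 0.05]))  # dog
m.attend("dog")
print(m.context())                # ['cat', 'dog']
```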

These and several other technical problems have been solved in at least one Cognitive AI implementation: INSA (Integrated Neuro-Symbolic Architecture).

In summary, while Statistical/Generative AI has given us a taste of what AI may become, it falls far short of true human-like intelligence. For that, we need Cognitive AI.

So why continue pursuing the dead-end of Statistical AI?

Why not focus on getting Cognitive AI to reach its full potential to help boost individual human flourishing?
